Artificial intelligence is no longer limited to static models that generate answers on demand. The rise of AI agents — systems powered by large language models and other AI technologies that can act autonomously to perform tasks, make decisions, and interact with digital environments — marks the next phase of AI adoption. These agents can book meetings, handle customer service queries, draft business reports, search the web, execute workflows, and even integrate with enterprise systems.

But with this new capability comes a new category of security risks. Unlike traditional AI models that simply respond to prompts, AI agents can take actions — sometimes across networks, applications, or databases. If compromised, they could leak sensitive information, be manipulated into performing malicious tasks, or be exploited by attackers as an entry point into larger systems.

Why Securing AI Agents Is Critical

The stakes are rising as businesses, governments, and consumers integrate AI agents into daily operations:

-

Data Exposure: AI agents often have access to sensitive data — customer records, financial information, or proprietary business documents. Without proper safeguards, attackers can exploit vulnerabilities to exfiltrate that data.

-

Autonomy and Trust: Unlike human-controlled systems, AI agents may act without constant oversight. A small manipulation (like a crafted prompt or injected malicious command) could have cascading consequences.

-

Emerging Attack Surface: New threats such as prompt injection, model poisoning, and adversarial manipulation are being actively tested by malicious actors.

-

Compliance Risks: Mishandled data by AI agents can lead to violations of privacy regulations such as GDPR, HIPAA, or the upcoming EU AI Act, resulting in heavy penalties.

The Changing Threat Landscape

Traditional cybersecurity focused on securing networks, devices, and users. Now, organizations must treat AI agents as first-class assets within their security perimeter. For example:

-

A customer service chatbot could be manipulated into revealing confidential account details.

-

An AI-powered financial agent might be tricked into making unauthorized transactions.

-

A research assistant AI could inadvertently leak intellectual property if connected to unsecured systems.

These risks are not hypothetical — red team exercises and early case studies show that attackers are actively probing AI systems for weaknesses.

Purpose of This Blog

In this article, we’ll explore how to secure AI agents effectively. We’ll cover the common vulnerabilities that make them attractive targets, the principles and technical measures to strengthen their defenses, the role of compliance and governance, and real-world examples of how different industries are approaching AI security.

By the end, you’ll have a roadmap for building secure, trustworthy AI agents that can deliver value without exposing your organization to unnecessary risks.

Key Security Risks of AI Agents

AI agents bring automation, efficiency, and intelligence — but they also open the door to new categories of threats. Unlike static AI models, agents are often connected to external systems (databases, APIs, email, cloud platforms) and act autonomously. This combination makes them especially vulnerable if not properly secured. Below are the major risks organizations must account for when deploying AI agents.

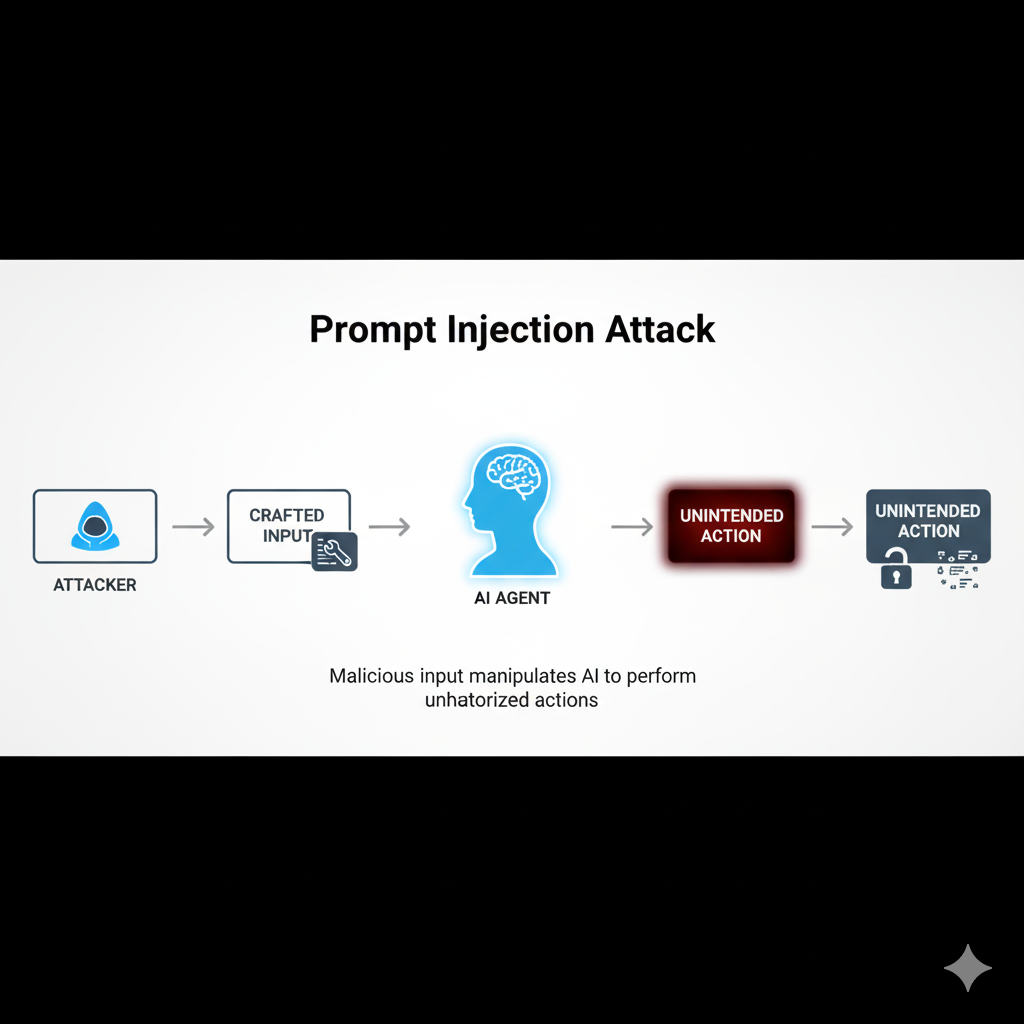

1. Prompt Injection Attacks

One of the most common vulnerabilities is prompt injection.

-

In this attack, a malicious actor crafts inputs designed to trick the AI agent into ignoring its instructions or performing unintended actions.

-

For example, an attacker could embed hidden instructions in a user query or in external content (like a webpage) that the agent processes.

-

A compromised agent might leak sensitive information, bypass safety filters, or execute unauthorized commands.

Prompt injection is similar to SQL injection in traditional systems — a small manipulation that can lead to outsized consequences.

2. Data Leakage

AI agents often handle confidential business data, customer records, or intellectual property.

-

Poorly configured agents may accidentally reveal private data to unauthorized users.

-

Some agents that “learn” from conversations risk exposing past interactions to new users.

-

If logs and conversations are stored insecurely, they become a valuable target for attackers.

This makes data minimization and strong access controls essential for safe deployment.

3. Model Manipulation and Poisoning

AI models can be deliberately poisoned by feeding them corrupted or biased training data.

-

For LLM-powered agents, attackers could attempt to insert malicious examples during fine-tuning.

-

Poisoned models may produce biased outputs, misinformation, or harmful recommendations.

-

In dynamic environments, online learning systems are particularly vulnerable to poisoning attempts.

4. Impersonation and Social Engineering

AI agents are designed to interact with humans naturally, but this strength can be exploited.

-

Attackers can impersonate legitimate users, tricking an AI agent into performing sensitive actions.

-

Conversely, malicious actors can deploy fake AI agents to impersonate trusted entities, stealing credentials or misleading users.

-

In customer service, this could result in fraud; in enterprise workflows, it could lead to unauthorized access.

5. Over-Delegation of Authority

Because AI agents are autonomous, they often have direct access to tools like email, calendars, payment systems, or databases.

-

If an attacker gains control, they can misuse these integrations.

-

Even without attackers, an agent acting on incomplete or misinterpreted data could unintentionally cause damage.

-

Examples include sending unauthorized payments, deleting files, or sharing sensitive documents.

6. Misuse by Malicious Actors

Not all threats come from outsiders — AI agents themselves can be weaponized.

-

Cybercriminals could configure agents to automate phishing, misinformation, or malware generation at scale.

-

An unsecured AI agent in the wild could become a tool for spam campaigns or cyberattacks.

The risks of AI agents are diverse: they can be tricked, misled, impersonated, poisoned, or hijacked. Because these systems act autonomously and often hold privileged access, even small vulnerabilities can lead to serious breaches. Understanding these risks is the first step toward securing AI agents and building user trust.

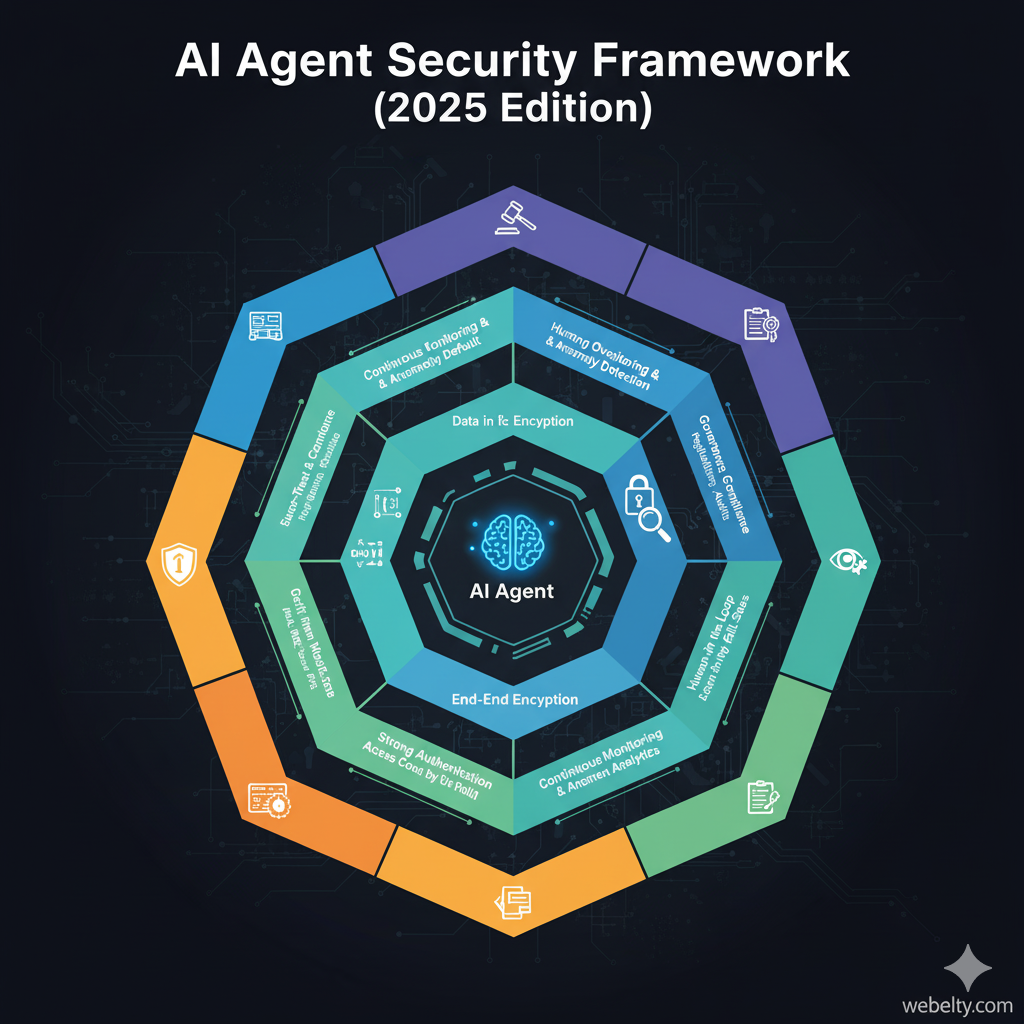

Core Principles of Securing AI Agents

Securing AI agents is not just about adding firewalls or monitoring logs. Because these systems act autonomously, interact with external data, and access sensitive resources, they require a multi-layered security approach. The following principles form the foundation of AI agent security in 2025 and beyond.

1. Zero-Trust Security Model

The traditional model of assuming trust within a system no longer works for AI agents. A zero-trust approach means:

-

Every request, user, or external input is verified, regardless of origin.

-

AI agents are not given blanket access to systems — instead, they receive least-privilege permissions limited to their current task.

-

Continuous authentication and monitoring ensure that access is revoked if suspicious behavior is detected.

This reduces the chance that a compromised agent can cause widespread damage.

2. Strong Authentication and Access Control

AI agents often integrate with sensitive systems like CRM platforms, payment gateways, or email servers. To secure this access:

-

Enforce multi-factor authentication (MFA) for agent credentials.

-

Use API keys, tokens, and role-based access controls (RBAC) to limit functionality.

-

Rotate credentials frequently and never hard-code them into prompts or scripts.

This ensures that even if an attacker compromises the agent, they cannot easily escalate privileges.

3. Encryption of Data in Transit and at Rest

AI agents frequently process sensitive data, from medical records to financial transactions. Encryption is non-negotiable:

-

TLS (Transport Layer Security) for all data transmissions.

-

AES-256 or equivalent encryption for stored logs, training data, and outputs.

-

Tokenization or anonymization to minimize exposure of personally identifiable information (PII).

Encryption prevents attackers from intercepting or exploiting sensitive information.

4. Monitoring and Anomaly Detection

AI agents should be continuously observed for unusual activity.

-

Implement real-time monitoring of queries, outputs, and system access.

-

Use anomaly detection systems to flag abnormal behaviors (e.g., a spike in API calls, unusual file access).

-

Maintain audit logs to trace every action taken by an AI agent for forensic analysis.

This helps detect attacks early and supports incident response.

5. Regular Updates and Model Patching

AI models and their integrations must be maintained like any other software:

-

Patch vulnerabilities in underlying frameworks, APIs, and libraries.

-

Regularly update LLMs to benefit from improved safety features and mitigations against new attack methods.

-

Apply adversarial training and red teaming results to continuously strengthen security.

A stagnant AI agent becomes an easy target for evolving threats.

6. Human Oversight and Fail-Safes

No AI system should operate in complete isolation, especially in critical environments.

-

Implement human-in-the-loop oversight for sensitive tasks (e.g., approving financial transactions).

-

Define kill-switch mechanisms to shut down or isolate an agent if it behaves unexpectedly.

-

Establish clear escalation protocols when anomalies are detected.

Human oversight ensures accountability and provides a final safeguard against AI errors or malicious manipulation.

The core principles of AI agent security are about trust minimization, data protection, and active oversight. By applying zero-trust, enforcing strong authentication, encrypting data, monitoring continuously, patching regularly, and keeping humans in the loop, organizations can build AI agents that are secure, reliable, and resilient against emerging threats.

Technical Measures to Secure AI Agents

While core principles set the foundation for AI agent security, organizations also need practical, technical safeguards to prevent real-world exploits. These measures ensure that AI agents can operate autonomously without becoming weak links in the security chain.

1. Input Validation and Prompt Filtering

AI agents are especially vulnerable to prompt injection attacks where malicious instructions are hidden in user input or external data.

-

Implement input sanitization to filter harmful instructions before they reach the model.

-

Maintain blocklists and allowlists for restricted commands or sensitive data queries.

-

Apply output constraints so the agent cannot execute actions beyond its intended scope.

This prevents attackers from manipulating an agent into leaking data or bypassing its safety rules.

2. Rate Limiting and Usage Monitoring

AI agents must be protected from abuse through excessive or malicious requests.

-

Use rate limiting to cap the number of queries per user or per time interval.

-

Monitor for unusual activity, such as sudden spikes in requests or attempts to extract sensitive data.

-

Implement throttling mechanisms to slow down or block suspicious traffic.

This helps prevent denial-of-service scenarios and brute-force style attacks against AI systems.

3. Sandbox and Segmented Environments

AI agents should never have unrestricted access to enterprise systems.

-

Run them in sandboxed environments where they can execute tasks without risking the core network.

-

Use segmentation to separate sensitive databases, files, and applications from the AI agent’s operational scope.

-

Allow only pre-approved connectors (e.g., APIs with scoped permissions) for system access.

By isolating the agent, organizations minimize the blast radius if something goes wrong.

4. Red Teaming and Adversarial Testing

AI agents require continuous security testing, just like traditional software.

-

Conduct red teaming exercises where security experts attempt to break or mislead the agent.

-

Test against adversarial examples designed to exploit model weaknesses.

-

Perform regular penetration testing of APIs and integrations.

These proactive steps reveal vulnerabilities before malicious actors can exploit them.

5. Secure APIs and Integration Protocols

AI agents frequently rely on APIs to perform tasks like sending emails, retrieving data, or making transactions.

-

Use API gateways with authentication, rate limits, and monitoring.

-

Apply OAuth 2.0 and token-based access controls for secure integrations.

-

Ensure all API communications are encrypted using TLS 1.3 or stronger.

Poorly secured APIs are one of the easiest ways for attackers to compromise an AI agent.

6. Logging and Traceability

For accountability, every action an AI agent takes should be logged and auditable.

-

Keep detailed logs of inputs, outputs, and actions triggered.

-

Store logs securely with encryption to prevent tampering.

-

Enable traceability features to quickly identify the source of suspicious behavior.

Logging supports both real-time monitoring and post-incident investigations.

Technical security measures are the practical armor that keeps AI agents safe from real-world threats. From input validation and sandboxing to secure APIs and continuous red teaming, these defenses ensure that agents operate within strict boundaries, minimizing risks of data leakage, misuse, or unauthorized access.

Governance, Compliance & Ethical Safeguards

Technology alone cannot fully secure AI agents. Because these systems handle sensitive data, make semi-autonomous decisions, and interact with people, organizations must implement governance frameworks, compliance policies, and ethical safeguards. Together, these create the trust foundation for secure and responsible AI use.

1. Aligning with Regulations

AI is increasingly regulated, and AI agents must comply with emerging laws:

-

EU AI Act (expected 2026): Classifies high-risk AI systems and mandates strict compliance on transparency, risk management, and human oversight.

-

NIST AI Risk Management Framework (U.S.): Provides guidelines for trustworthy AI, including safety, security, and explainability.

-

ISO/IEC Standards: Ongoing work includes global standards on AI risk, transparency, and governance.

Organizations deploying AI agents in finance, healthcare, or government must ensure compliance or face penalties that can reach millions of dollars or 6% of annual revenue.

2. Data Privacy and Minimization

AI agents should follow a privacy-first design to protect users and avoid legal risks:

-

Collect and process only the data necessary to complete tasks (data minimization).

-

Apply anonymization or pseudonymization when storing or processing sensitive data.

-

Give users transparency and control over how their information is used, stored, and shared.

This ensures compliance with GDPR, HIPAA, and other global privacy laws.

3. Accountability and Human Oversight

No AI agent should operate in a black box:

-

Establish human-in-the-loop mechanisms for high-stakes decisions (e.g., financial transactions, medical advice).

-

Document agent behavior and decision-making processes to provide audit trails.

-

Appoint AI governance committees to review and approve deployments.

Accountability reduces the risk of AI agents making harmful or biased decisions without supervision.

4. Ethical Safeguards

Ethics go beyond compliance — they ensure AI agents act responsibly:

-

Bias Mitigation: Continuously test agents for biased or discriminatory outputs.

-

Explainability: Strive to make AI agent decisions interpretable for users.

-

Fair Use Policies: Prevent misuse of AI agents for harmful purposes such as misinformation or fraud.

-

Transparency: Communicate clearly when users are interacting with an AI agent versus a human.

These safeguards build trust with customers and regulators, making adoption more sustainable.

5. Risk Management Frameworks

Adopting structured frameworks ensures consistency:

-

Conduct regular risk assessments to identify vulnerabilities.

-

Use threat modeling to anticipate how AI agents could be attacked.

-

Maintain incident response plans for AI-related breaches.

Integrating AI agent security into existing DevSecOps pipelines ensures risks are managed from development through deployment.

Governance, compliance, and ethics are not optional extras — they are core pillars of securing AI agents. By aligning with global regulations, protecting data privacy, ensuring accountability, and embedding ethical safeguards, organizations can deploy AI agents that are not only effective but also trustworthy and compliant.

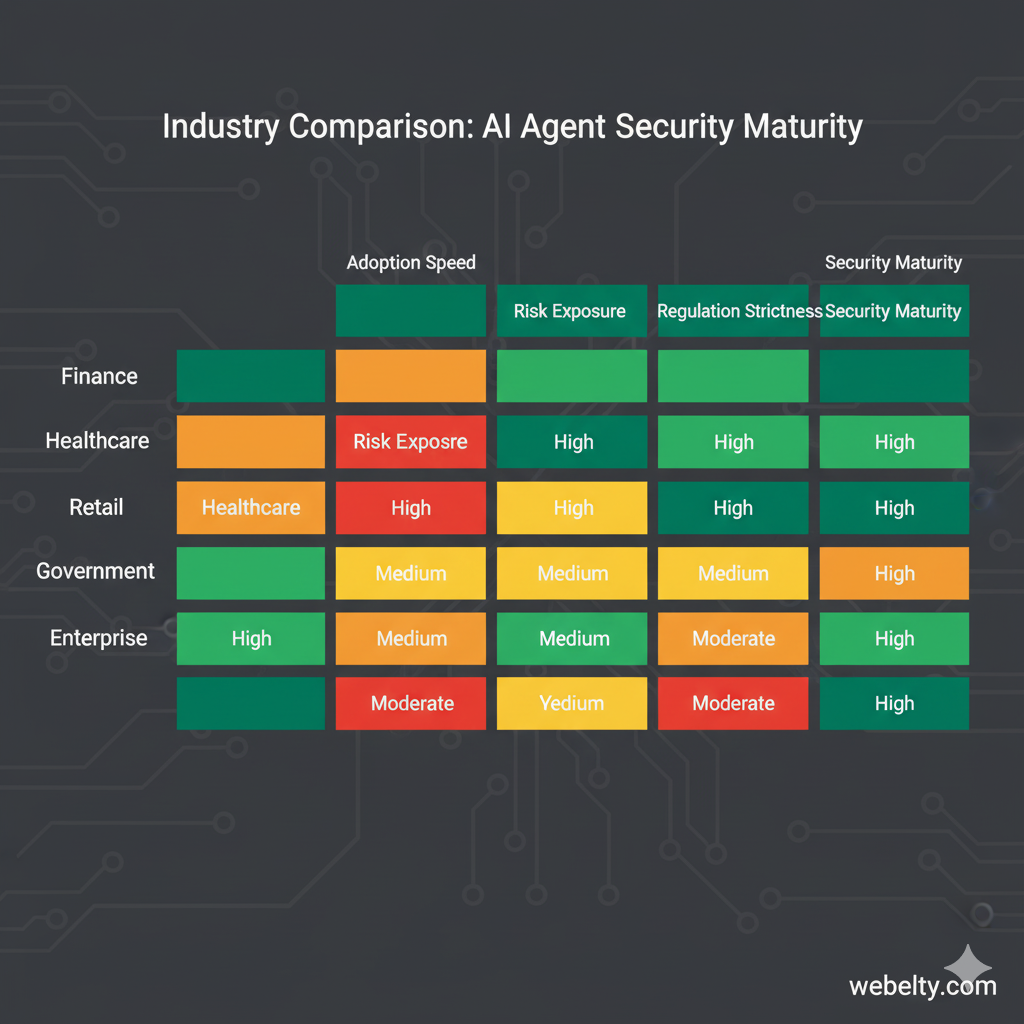

Industry Use Cases of Securing AI Agents

AI agents are being deployed across industries to streamline operations, improve customer service, and enhance decision-making. But each sector faces unique risks, making security strategies highly context-specific. Below are real-world examples of how different industries are approaching the challenge of securing AI agents.

Finance and Banking

Financial services are among the earliest adopters of AI agents, using them for fraud detection, customer engagement, and transaction automation.

Risks:

-

Fraudsters can exploit weak authentication to trick agents into executing unauthorized transfers.

-

Prompt injection attacks may lead to exposure of sensitive financial data.

Security Measures:

-

Multi-factor authentication (MFA) for any AI-driven transactions.

-

Real-time transaction monitoring systems to flag anomalies.

-

Deploying agents in sandboxed environments before connecting to live payment systems.

Impact: Secure AI agents in finance reduce fraud losses, improve customer trust, and help banks meet strict compliance standards.

Healthcare

Healthcare AI agents support clinical decision-making, automate patient triage, and assist with medical documentation.

Risks:

-

Exposure of electronic health records (EHRs) due to insufficient data encryption.

-

Malicious actors exploiting agents for unauthorized access to patient data.

Security Measures:

-

End-to-end encryption of patient interactions.

-

Strict adherence to HIPAA and GDPR requirements.

-

Human-in-the-loop validation for high-stakes outputs like diagnosis support.

Impact: By securing healthcare AI agents, hospitals and providers can leverage automation while maintaining patient privacy and regulatory compliance.

Customer Service and Retail

Retailers and service providers are deploying AI agents for customer engagement, personalized recommendations, and automated support.

Risks:

-

Agents manipulated into disclosing personal customer data.

-

Impersonation attacks where fake AI agents pretend to represent a brand.

Security Measures:

-

User identity verification before processing sensitive queries.

-

Transparency protocols to inform users when they are speaking to an AI agent.

-

Logging and monitoring to detect unusual agent behaviors.

Impact: Secure AI agents help retailers reduce costs while maintaining customer trust in digital interactions.

Enterprise Automation

Organizations are integrating AI agents into back-office workflows, from HR to IT support.

Risks:

-

Over-delegation of authority, where agents gain access to sensitive enterprise systems.

-

Poorly secured integrations leading to data leaks.

Security Measures:

-

Role-based access controls (RBAC) restricting agent privileges.

-

Segmented environments to isolate sensitive business data.

-

Kill-switch mechanisms allowing admins to shut down an agent instantly if it misbehaves.

Impact: Secure enterprise AI agents improve efficiency without exposing organizations to insider or outsider threats.

Government and Public Services

Governments are testing AI agents for citizen services, from tax support to legal aid.

Risks:

-

Risk of disinformation if agents provide inaccurate or manipulated advice.

-

Attacks targeting citizen data stored in government systems.

Security Measures:

-

Rigorous red teaming and adversarial testing before deployment.

-

Clear accountability frameworks to ensure human oversight.

-

Data minimization policies to prevent over-collection of sensitive information.

Impact: Properly secured AI agents can make public services faster and more accessible while safeguarding national security and citizen trust.

Across industries, the risks vary — but the principle is the same: an unsecured AI agent can cause real financial, legal, and reputational damage. By applying tailored security strategies, organizations can safely unlock the benefits of AI automation without compromising trust or compliance.

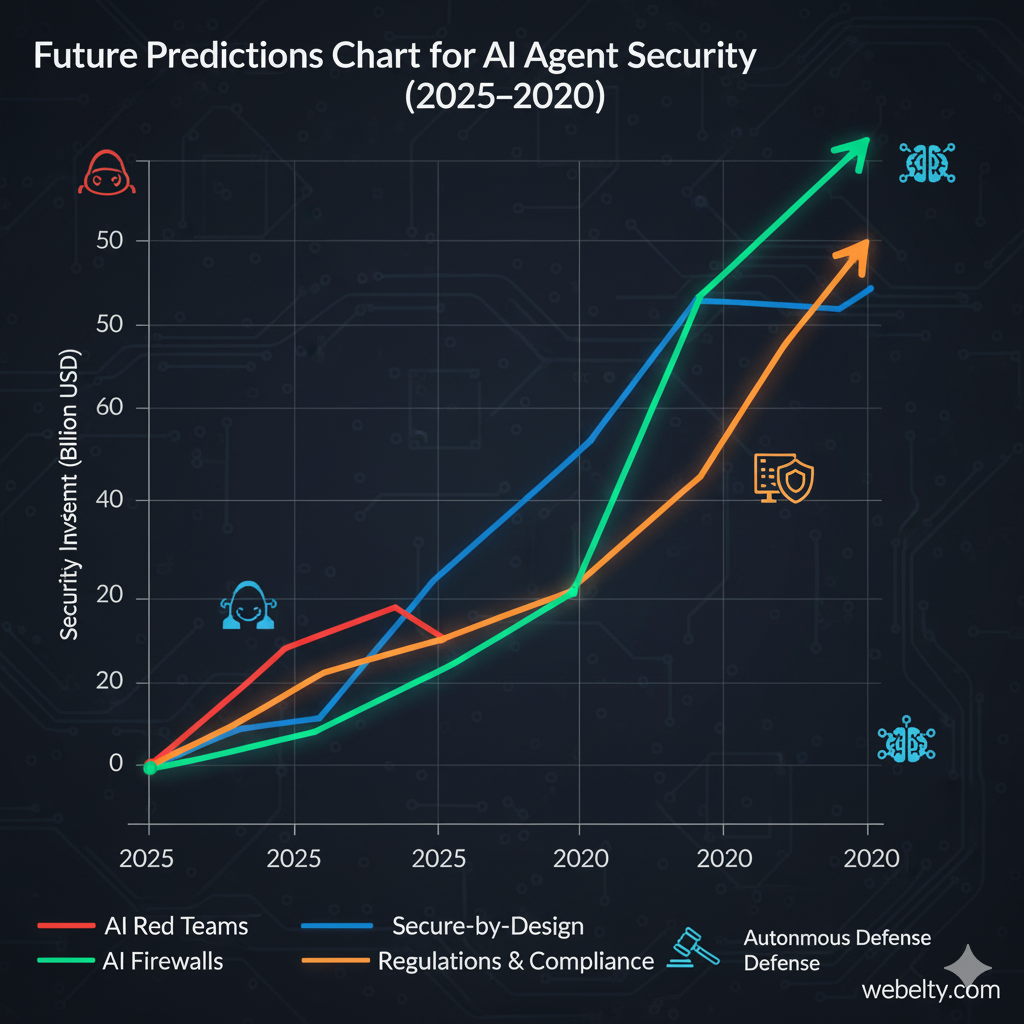

Future of AI Agent Security

As AI agents continue to evolve, so too will the methods to protect them. The next five years will see a shift from today’s basic security add-ons to fully integrated AI-native security frameworks. The future of AI agent security will be defined by advanced tools, proactive risk management, and global regulations that shape how these systems operate.

1. Growth of AI Red Teams

Organizations are already experimenting with AI red teaming, where ethical hackers simulate attacks to expose vulnerabilities in AI agents.

-

By 2026, AI red teams are expected to become standard practice in large enterprises.

-

These teams will test against prompt injection, data leakage, impersonation, and adversarial attacks.

-

Insights will feed into continuous model improvements and policy updates.

This proactive approach will replace reactive “patch after breach” strategies.

2. AI Firewalls and Security Layers

Just as networks rely on firewalls, AI agents will be shielded by specialized AI firewalls.

-

These systems will monitor and filter both inputs and outputs in real time.

-

They’ll block malicious prompts, prevent data exfiltration, and enforce compliance with predefined rules.

-

By 2027, it’s expected that AI firewalls will be as common as web application firewalls (WAFs) in enterprise environments.

3. Secure-by-Design AI Frameworks

Instead of bolting on security, future AI agents will be built with secure-by-design principles:

-

Models will undergo security testing during development, not just post-deployment.

-

Training pipelines will include bias checks, poisoning defenses, and adversarial robustness tests.

-

Developers will have toolkits for embedding encryption, logging, and compliance features directly into the agent lifecycle.

This shift mirrors the DevSecOps movement in traditional software.

4. Regulatory and Compliance Evolution

AI security will increasingly be shaped by laws and standards:

-

The EU AI Act, expected to take effect by 2026, will mandate robust security and transparency for high-risk AI systems.

-

Other regions (U.S., Asia-Pacific) are drafting their own AI governance frameworks.

-

Industry-specific standards (finance, healthcare, defense) will add another layer of requirements.

By 2030, AI agent security may be governed by a global baseline standard, much like today’s ISO standards for cybersecurity.

5. Convergence with Cybersecurity Tools

AI agent security will not remain siloed. Instead, it will integrate into broader cybersecurity systems:

-

AI activity logs will feed into SIEM platforms for centralized monitoring.

-

AI-specific alerts will be part of SOC workflows.

-

Security orchestration tools will automatically respond to AI-related anomalies, isolating compromised agents in real time.

6. Towards Autonomous Defense

Ironically, AI itself will play a bigger role in defending AI.

-

Self-monitoring AI agents will identify and block suspicious activity.

-

Defensive AI systems will learn from attack attempts and automatically adapt protections.

-

This evolution could lead to a future of AI agents protecting AI agents, reducing human dependency for first-line defenses.

The future of AI agent security lies in proactivity, automation, and regulation. With red teams, AI firewalls, secure-by-design frameworks, and global standards, securing AI agents will evolve from an afterthought to a strategic discipline. The ultimate goal: build AI agents that are not only intelligent and autonomous but also trustworthy, resilient, and self-defending.

Conclusion

AI agents are no longer futuristic concepts — they are rapidly becoming an integral part of business operations, customer experiences, and even government services. From handling support tickets to automating financial workflows, these systems promise enormous productivity gains. But with this autonomy comes risk. An unsecured AI agent is not just a weak link; it can become a gateway for data breaches, fraud, and misuse.

The risks are clear: prompt injection attacks that trick agents into bypassing safeguards, data leaks exposing sensitive information, model poisoning that corrupts outputs, impersonation schemes that erode trust, and malicious use cases that weaponize AI itself. These threats demonstrate why security cannot be treated as an afterthought.

To meet these challenges, organizations must adopt a layered approach to AI agent security. Core principles like zero-trust architecture, encryption, monitoring, and human oversight lay the foundation. Technical measures such as input validation, sandboxing, secure APIs, and red teaming provide practical defenses. Beyond technology, strong governance and compliance frameworks ensure accountability, while ethical safeguards build long-term trust with users and regulators.

Industry use cases further prove that securing AI agents is not optional. In finance, it prevents fraud and ensures regulatory compliance. In healthcare, it protects patient data and supports safe automation. In retail, it preserves customer trust. In government, it strengthens national security and protects citizens. Across all sectors, the ability to secure AI agents is directly tied to reputation, compliance, and competitiveness.

Looking forward, AI agent security will continue to mature. Red teams, AI firewalls, and secure-by-design frameworks will become industry standards. Regulations such as the EU AI Act will enforce stronger safeguards, while autonomous defense systems powered by AI will protect agents in real time.

Final Thought

The future of AI agents is bright, but only if organizations approach them with the same rigor as any other critical infrastructure. Securing AI agents is about more than avoiding breaches — it is about enabling trust, innovation, and sustainable growth. Businesses that act today to embed strong security will be best positioned to harness the full potential of AI agents tomorrow.

FAQs

Q1: What are AI agents and why do they need security?

AI agents are autonomous systems powered by AI/LLMs that can perform tasks and interact with data. They need security to prevent misuse, data leaks, and attacks.

Q2: What are the biggest security risks for AI agents?

Key risks include prompt injection attacks, data leakage, model poisoning, impersonation, and over-delegation of authority.

Q3: How can I secure AI agents at a technical level?

Use input validation, sandbox environments, secure APIs, rate limiting, logging, and continuous red teaming to protect AI agents from threats.

Q4: What governance measures help secure AI agents?

Compliance with regulations (EU AI Act, GDPR, HIPAA), bias testing, accountability frameworks, and human-in-the-loop oversight strengthen security.

Q5: What is the future of AI agent security?

AI firewalls, red teaming, secure-by-design frameworks, and global regulations will define the future. Autonomous defense systems may allow AI to protect AI.