Deepfake identity fraud has rapidly emerged as one of the most dangerous threats to the global KYC ecosystem used by banks, fintech platforms, lending companies, insurance providers, and cryptocurrency exchanges. The rise of powerful generative AI tools has enabled criminals to create synthetic faces, alter real faces, fabricate identity documents, and even produce fully interactive deepfake liveness videos that can bypass automated verification systems with surprising ease.

Financial institutions have traditionally relied on face matching, document verification, and video-based liveness checks. Deepfake technology can now defeat all three. Criminals are no longer only stealing identities. They are manufacturing them. This evolution changes the nature of fraud: a synthetic identity does not belong to a real victim, so detection is significantly harder, and there is no one to notice or dispute the fraudulent activity.

The black market economy has already adapted by offering Deepfake-as-a-Service packages, where fraudsters can purchase ready-made KYC videos or order custom deepfake models built specifically for a target institution. This blog explores the underground ecosystem powering deepfake identity fraud, the end-to-end workflow of deepfake KYC attacks, the failure points inside current KYC systems, and the future technologies that will protect financial institutions against synthetic identity fraud.

How Deepfake KYC Attacks Begin With Identity Harvesting or Synthetic Identity Creation

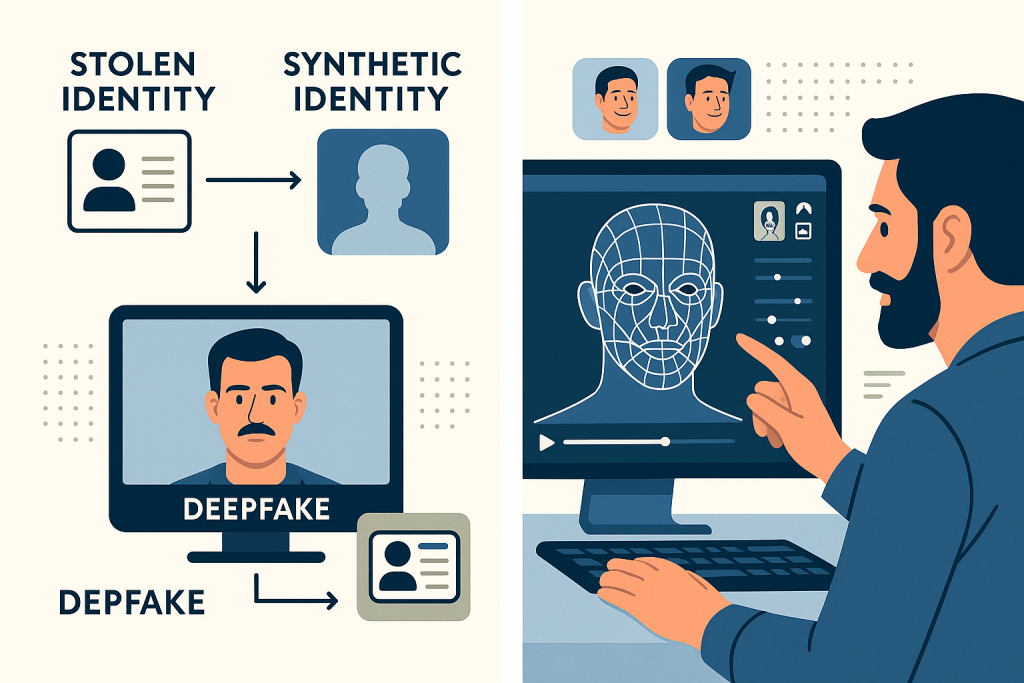

The entire deepfake fraud pipeline begins with establishing a usable identity. Criminals either harvest stolen identities or generate synthetic identities from scratch.

Stolen identities usually come from:

• Massive data breaches involving banks, telecom companies or KYC vendors

• Social media scraping, where criminals gather high-resolution images of real people

• Hacked email accounts containing passport scans or driver's license photos

• Darknet marketplaces selling identity packs including selfies and ID documents

Criminals take these images and create a facial model that can be animated. The victim is often unaware because the deepfake appears in a system they have never used.

Synthetic identities are far more dangerous because:

• There is no real person behind them

• Banks cannot cross-check the identity against existing databases

• Synthetic customers pass most AI-based risk scoring

• Lenders and fintechs approve these identities because they appear legitimate
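From the defender's side, the cross-checking gap described above can be made concrete. The following is only a minimal sketch, not a description of how any real KYC system works: the `Applicant` record, its field names, the source labels, and the `min_sources` threshold are all hypothetical and chosen for illustration. It shows the idea of sending an application to manual review when too few independent external sources corroborate the claimed identity.

```python
from dataclasses import dataclass, field


@dataclass
class Applicant:
    """Hypothetical onboarding record; all field names are illustrative."""
    name: str
    date_of_birth: str
    address: str
    # Labels of external sources (for example a credit bureau or telecom
    # record) that returned a match for this applicant. Empty means no match.
    corroborating_sources: set[str] = field(default_factory=set)


def needs_manual_review(applicant: Applicant, min_sources: int = 2) -> bool:
    """Return True when fewer than `min_sources` independent sources match.

    The default threshold of 2 is an assumption for this sketch, not a
    recommended value.
    """
    return len(applicant.corroborating_sources) < min_sources


# Usage: an identity that exists nowhere else is flagged for review.
unmatched = Applicant("Jane Example", "1990-01-01", "1 Example Street")
matched = Applicant(
    "John Example",
    "1985-05-05",
    "2 Example Street",
    corroborating_sources={"credit_bureau", "telecom"},
)
print(needs_manual_review(unmatched), needs_manual_review(matched))
```

A check like this only captures the absence of corroboration. A synthetic identity that has been given a fabricated credit profile may still pass it, which is why it can only be one signal among several.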

A synthetic identity often includes:

• A fully AI-generated human face

• A matching fake ID card created with AI document tools

• A fabricated date of birth and address

• A synthetic credit profile

• An AI-generated voice sample

Synthetic identity fraud is already one of the fastest-growing forms of financial crime, and deepfake tools are accelerating the problem.

This foundational identity then moves into the deepfake training pipeline.